- Understand the ODI architecture.

- Understand the components that make up ODI.

- Understand what ODI repositories are.

Architecture Overview:

What is Oracle Data Integrator?

- Data integration product.

- ODI is a development platform (business-rule driven, E-LT approach).

- Simpler and faster integration.

- Based on metadata stored in a centralized repository.

Oracle Data Integrator is an integration platform. Simply put, it is used to move and transform information across the information system. Oracle Data Integrator is also a development platform for integration processes. It is unique in two respects:

- It uses an approach driven by business rules. In this approach, you focus your effort on the business side of integration, and not on the technical aspects.

- It uses the E-LT (Extract, Load, Transform) approach. Oracle Data Integrator does not execute the integration processes itself at run time. Instead, it orchestrates processes that leverage existing systems.

Oracle Data Integrator is based on metadata, that is, descriptive information about the information system and its contents. This metadata is stored in a centralized metadata repository. Combined, these elements mean that Oracle Data Integrator AIP enables “Simply Faster Integration.”

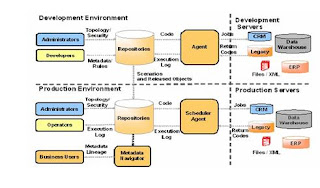

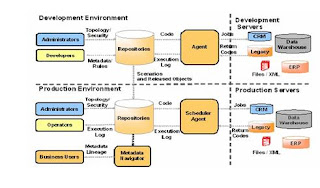

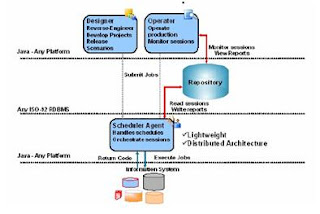

ODI Architecture

The central component of the architecture is the repository. It stores configuration information about the IT infrastructure, the metadata for all applications, projects, and scenarios, and the execution logs. Repositories can be installed on an OLTP relational database. The repository also contains information about the Oracle Data Integrator infrastructure, as defined by the administrators.

Administrators, developers, and operators use different Oracle Data Integrator Graphical User Interfaces to access the repositories.

Security Manager and Topology Manager are used to administer the infrastructure, Designer is used to reverse-engineer metadata and develop projects, and Operator is used to schedule and manage run-time operations.

At design time, developers work in a repository to define metadata and business rules. The resulting processing jobs are executed by the Agent, which orchestrates the execution by leveraging existing systems. It connects to the available servers and requests them to execute the code. It then stores all return codes and messages in the repository, along with statistics such as the number of records processed and the elapsed time.

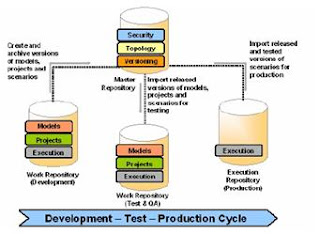

Several different repositories can coexist in a single IT infrastructure. The diagram above shows two repositories: one for the development environment and another for the production environment. The developers release their projects in the form of scenarios that are sent to production.

In production, these scenarios are scheduled and executed by a Scheduler Agent, which also stores all its information in the repository. Operators have access to this information and can monitor the integration processes in real time.

Business users, as well as developers, administrators and operators, can get Web-based read access to the repository. The Metadata Navigator application server links the Oracle Data Integrator Repository to any Web browser, such as Firefox or Internet Explorer.

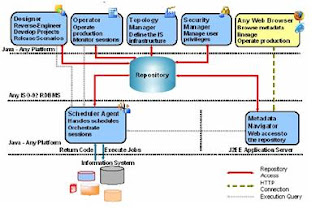

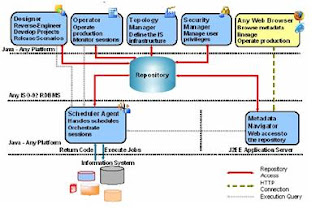

ODI Components

The four Oracle Data Integrator GUIs (Designer, Operator, Topology Manager, and Security Manager) are based on Java. They can be installed on any platform that supports Java Virtual Machine 1.4, including Windows, Linux, HP-UX, Solaris, pSeries, and so on.

Designer is the GUI for defining metadata and rules for transformation and data quality. It uses these to generate scenarios for production, and it is where all project development takes place. It is the core module for developers and metadata administrators.

Operator is used to manage and monitor Oracle Data Integrator in production. It is designed for production operators and shows the execution logs with error counts, the number of rows processed, execution statistics, and so on. At design time, developers use Operator for debugging purposes.

Topology Manager manages the physical and logical architecture of the infrastructure. Servers, schemas, and agents are registered here in the Oracle Data Integrator Master Repository. This module is usually used by the administrators of the infrastructure.

Security Manager manages users and their privileges in Oracle Data Integrator. It can be used to give profiles and users access rights to Oracle Data Integrator objects and features. This module is usually used by security administrators. All Oracle Data Integrator modules store their information in the centralized Oracle Data Integrator repository.

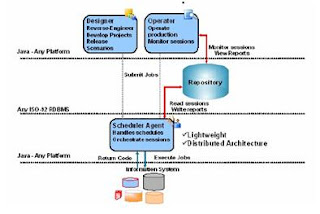

ODI Run Time Components

At run time, the Scheduler Agent orchestrates the execution of the developed scenarios. It can be installed on any platform that supports Java Virtual Machine 1.4 (Windows, Linux, HP-UX, Solaris, pSeries, iSeries, zSeries, and so on).

Execution may be launched from one of the graphical modules or by using the built-in scheduler. Thanks to Oracle Data Integrator’s E-LT architecture, the Scheduler Agent rarely performs any transformation itself. Normally, it simply retrieves code from the execution repository and requests database servers, operating systems or scripting engines to execute it. When the execution is completed, the Scheduler Agent updates the logs in the repository, reporting error messages and execution statistics.

The execution log can be viewed from the Operator graphical module. It is important to understand that although the agent can act as a transformation engine, it is rarely used this way in practice. Agents are installed at tactical locations in the information system to orchestrate the integration processes and leverage existing systems. Agents are lightweight components in this distributed integration architecture.
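The run-time behavior described above can be modeled in a few lines. The following Python sketch is a simplified, hypothetical model, not ODI's actual agent or any ODI API: the names `Step`, `LogEntry`, `Repository`, `run_scenario` and `fake_database` are illustrative assumptions. It shows the E-LT division of labor: the agent only fetches generated code from the repository, hands each step to the target system, and writes return codes, messages, row counts and elapsed time back to the repository.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical scenario step: generated code plus the name of the system
# (database, operating system, scripting engine) that must run it.
@dataclass
class Step:
    name: str
    target: str
    code: str

# Hypothetical log record written back to the repository after each step.
@dataclass
class LogEntry:
    step: str
    return_code: int
    message: str
    rows_processed: int
    elapsed_seconds: float

# A target system is modeled as a callable that executes code and returns
# (return_code, message, rows_processed). The real work happens there,
# not in the agent.
Executor = Callable[[str], Tuple[int, str, int]]

@dataclass
class Repository:
    scenarios: Dict[str, List[Step]]
    logs: List[LogEntry] = field(default_factory=list)

def run_scenario(repo: Repository, scenario: str,
                 servers: Dict[str, Executor]) -> bool:
    """Orchestrate one scenario: read its steps from the repository, delegate
    each step to its target system, and log the outcome in the repository."""
    for step in repo.scenarios[scenario]:
        start = time.perf_counter()
        return_code, message, rows = servers[step.target](step.code)
        elapsed = time.perf_counter() - start
        repo.logs.append(LogEntry(step.name, return_code, message, rows, elapsed))
        if return_code != 0:
            return False  # assumed policy: stop at the first failing step
    return True

# Usage with a stand-in "database" that pretends to load 42 rows.
def fake_database(code: str) -> Tuple[int, str, int]:
    return 0, f"executed: {code}", 42

repo = Repository({"LOAD_SALES": [
    Step("load_target", "warehouse", "INSERT INTO sales SELECT * FROM stg_sales"),
]})
ok = run_scenario(repo, "LOAD_SALES", {"warehouse": fake_database})
print(ok, repo.logs[0].rows_processed)  # True 42
```

Stopping at the first failing step is an assumption made for brevity. The point of the sketch is that the agent itself transforms nothing; it only orchestrates and records.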

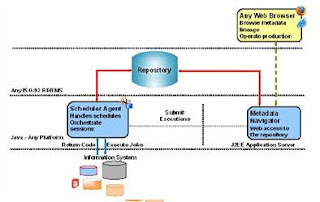

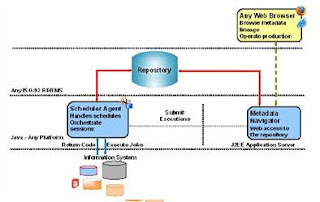

Metadata Navigator

Metadata Navigator is a J2EE application that provides Web access to Oracle Data Integrator repositories. It allows users to navigate projects, models, logs, and so on. By default, it is installed on Jakarta Tomcat Application Server.

Business users, developers, operators and administrators use their Web browser to access Metadata Navigator. Via its comprehensive Web interface, they can see flow maps, trace the source of all data and even drill down to the field level to understand the transformations that affected the data.

It is also possible to trigger and monitor processing jobs from a Web browser through Metadata Navigator.

Components – A global view

By putting these pieces together, you now have a global view of the components that make up Oracle Data Integrator: the graphical components, the repository, the Scheduler Agent, and finally Metadata Navigator.

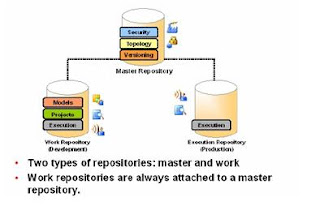

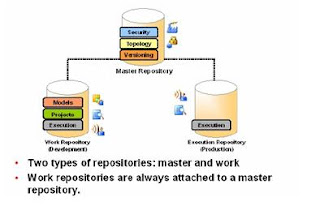

ODI Repository

The Oracle Data Integrator Repository is composed of a master repository and several work repositories. These repositories are databases stored in relational database management systems. All objects configured, developed, or used by the Oracle Data Integrator modules are stored in one of these two types of repository. The repositories are accessed in client/server mode by the various components of the Oracle Data Integrator architecture.

There is usually only one master repository, which contains the following information:

· Security information including users, profiles, and access privileges for the Oracle Data Integrator platform.

· Topology information including technologies, definitions of servers and schemas, contexts and languages.

· Old versions of objects.

The information contained in the master repository is maintained with Topology Manager and Security Manager. All modules access the master repository, as they all need the topology and security information stored there.

The work repository is where projects are worked on. Several work repositories may coexist in the same Oracle Data Integrator installation. This is useful, for example, to maintain separate environments or to reflect a particular versioning life cycle.

A work repository stores information for:

· Data models, which include the descriptions of schemas, data store structures and metadata, fields and columns, data quality constraints, cross references, data lineage, and so on

· Projects, which include business rules, packages, procedures, folders, knowledge modules, variables and so on

· Execution, which includes scenarios, scheduling information and logs

The contents of a work repository are managed with Designer and Operator. It is also accessed by the agent at run time.

When a work repository is used only to store execution information (typically for production purposes), it is called an execution repository. Execution repositories are accessed at run time with Operator and also by agents. An important rule to remember is that every work repository is always attached to exactly one master repository.
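The relationships between the repository types can be sketched as a small data model. This Python sketch is illustrative only and uses hypothetical class and method names (`MasterRepository`, `WorkRepository`, `add_project`, `add_scenario`); it is not ODI's repository schema. It encodes two rules from the text: each work repository is attached to exactly one master repository, and an execution repository holds only execution information.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class MasterRepository:
    # Security and topology information (maintained with Security Manager and
    # Topology Manager); simplified here to plain name lists.
    users: List[str] = field(default_factory=list)
    technologies: List[str] = field(default_factory=list)
    # Back-references to attached work repositories; excluded from repr and
    # comparison to avoid recursing through the master <-> work link.
    work_repositories: List["WorkRepository"] = field(
        default_factory=list, repr=False, compare=False)

@dataclass
class WorkRepository:
    name: str
    master: MasterRepository          # exactly one master, always required
    execution_only: bool = False      # True models an "execution repository"
    projects: List[str] = field(default_factory=list)
    scenarios: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not isinstance(self.master, MasterRepository):
            raise TypeError("a work repository must be attached to a master repository")
        self.master.work_repositories.append(self)

    def add_project(self, project: str) -> None:
        # Development objects are not stored in an execution repository.
        if self.execution_only:
            raise ValueError(f"{self.name} is an execution repository")
        self.projects.append(project)

    def add_scenario(self, scenario: str) -> None:
        # Scenarios (execution information) may live in any work repository.
        self.scenarios.append(scenario)

master = MasterRepository(users=["admin"], technologies=["Oracle"])
dev = WorkRepository("DEV", master)
prod = WorkRepository("PROD", master, execution_only=True)
dev.add_project("SALES_DW")
prod.add_scenario("LOAD_SALES")
try:
    prod.add_project("SALES_DW")
except ValueError as err:
    print(err)  # PROD is an execution repository
```

Making `master` a required constructor argument is one simple way to express the "exactly one master" rule in such a model.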

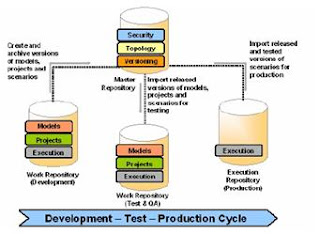

Example of Repository Setup

This diagram gives an overview of a typical repository architecture where development, testing and production are carried out in separate work repositories. When the development team finishes working on certain projects, it releases them into the master repository. The testing team imports these released versions for testing in a separate work repository, which allows the development team to continue working on the next versions. When the test team successfully validates the developed items, the production team exports executable versions (called scenarios) into the final production work repository. This repository structure corresponds to a simple development-test-production cycle.
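The development-test-production cycle can be traced with a small simulation. The following Python sketch is hypothetical: the classes `Master` and `Work`, the functions `release`, `import_release` and `export_scenario`, and the version numbers are illustrative assumptions rather than ODI commands. It shows why separate work repositories let development move on to the next version while testing and production keep working with the released one.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Master:
    # Released versions per project (the master also keeps old versions of objects).
    releases: Dict[str, List[int]] = field(default_factory=dict)

@dataclass
class Work:
    name: str
    projects: Dict[str, int] = field(default_factory=dict)   # project -> working version
    scenarios: Dict[str, int] = field(default_factory=dict)  # project -> executable version

def release(dev: Work, master: Master, project: str) -> None:
    """Development releases its current version of a project into the master."""
    master.releases.setdefault(project, []).append(dev.projects[project])

def import_release(master: Master, test: Work, project: str) -> None:
    """Testing imports the latest released version into its own work repository."""
    test.projects[project] = max(master.releases[project])

def export_scenario(test: Work, prod: Work, project: str) -> None:
    """After validation, an executable version (a scenario) goes to production."""
    prod.scenarios[project] = test.projects[project]

master = Master()
dev, test, prod = Work("DEV"), Work("TEST"), Work("PROD")

dev.projects["SALES_DW"] = 1
release(dev, master, "SALES_DW")
import_release(master, test, "SALES_DW")
dev.projects["SALES_DW"] = 2        # development continues on the next version
export_scenario(test, prod, "SALES_DW")

print(dev.projects, test.projects, prod.scenarios)
# {'SALES_DW': 2} {'SALES_DW': 1} {'SALES_DW': 1}
```

Production ends up with only a scenario, never the editable project, which mirrors the separation between development objects and execution information described above.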